Data Residency in TrueFoundry AI Gateway

Introduction

AI systems are no longer passive tools. They are increasingly agentic, operating autonomously across workflows, APIs, and sensitive enterprise data. In traditional systems, data residency was defined by where data was stored. Once databases and storage lived in approved regions, compliance was considered solved.

Agentic AI breaks that model. Every interaction generates new data surfaces (prompts, agent memory, logs, traces, and transient inference data) that are processed and observed at runtime, often across regions, even when nothing is persisted.

As a result, data residency is no longer a compliance checkbox. It is a core infrastructure concern now discussed at the board level. The question enterprises must answer is simple: where does AI-generated data move at runtime, and who controls those paths?

In TrueFoundry, data residency is enforced at the AI Gateway, where inference, agents, and tools converge. Residency is treated as a system property, enforced under normal operation, failures, and scale. This blog explains how data residency is defined, enforced, and verified in the TrueFoundry AI Gateway.

Why Data Residency Is Harder in AI Systems

Data residency was simpler when applications had predictable data paths. Requests flowed from users to services to databases, usually within a single region, and compliance controls were largely static.

AI systems break this model at runtime.

In modern AI architectures, data movement is dynamic and decision-driven, not fixed. A single user request can trigger multiple execution paths, all orchestrated by the AI Gateway. This is where data residency becomes fragile.

At runtime, an AI Gateway may:

- Select a model based on availability, latency, or policy

- Retry a request if a model endpoint times out

- Fail over to an alternate endpoint during partial outages

- Invoke downstream tools or MCP servers as part of agent workflows

- Emit prompts, responses, and traces to observability pipelines

Each of these decisions can introduce implicit data movement, often without the application being aware of it.

The most common data residency failures in AI systems occur:

- During failover, when traffic is silently routed to another region

- During multi-model routing, when only some models are region-scoped

- Through agent-driven tool invocation, where tools live in different regions

- Through logs and telemetry, which are often exported by default

Critically, these failures happen even when:

- The application is deployed in-region

- The primary model is hosted locally

- Storage systems are region-restricted

Why the AI Gateway Becomes the Enforcement Point

These failures all have one thing in common: they occur at runtime, driven by routing, retries, agent execution, and logging behavior.

The AI Gateway is the only layer that:

- Sees every request before execution

- Controls model selection, retries, and failover

- Mediates agent and tool invocation

- Emits observability data

This is why data residency in AI systems cannot be guaranteed through deployment configuration alone. It must be enforced at the AI Gateway, where execution paths are decided in real time.

In platforms like TrueFoundry, residency is treated as a hard runtime constraint, not a best-effort preference, ensuring that no execution path, including failure scenarios, can violate regional boundaries.

The New AI Data Liability: Prompts, Logs, and Transient Data

Agentic AI systems don’t just use data; they continuously generate new data surfaces at runtime. These surfaces did not exist in traditional applications, and they fundamentally change what data residency must account for.

In AI systems, data residency is no longer limited to data at rest. It extends to every piece of data created, processed, or observed during inference and agent execution, even if that data exists only briefly.

The most important of these new data liabilities are often the least visible.

Prompts and Agent State

Inference requests carry prompts and responses through the AI Gateway, frequently containing proprietary logic, customer data, or sensitive internal context. Unlike traditional APIs, this data is free-form and unsanitized, making it particularly high risk.

Agentic workflows introduce persistent context and memory across interactions. If this state is processed or replayed outside approved regions, residency is violated, even when individual inference calls appear compliant.

Logs, Telemetry, and Transient Data

AI systems also generate logs, traces, embeddings, and execution metadata that can encode sensitive information. If observability pipelines export this data across regions, violations occur silently.

Crucially, data does not need to be stored to be non-compliant. Transient inference data, processed only in memory for milliseconds, still falls under residency requirements if it crosses a jurisdictional boundary.

Why This Changes Residency Enforcement

Traditional residency controls were designed for static systems, not for dynamic routing, retries, failover, and agent-driven execution. In AI systems, residency must be enforced at runtime, where these data paths are created.

In platforms like TrueFoundry, this enforcement happens at the AI Gateway, where prompts, agent context, retries, and telemetry converge, making residency a system property rather than an assumption.

TrueFoundry Architecture: Where Data Residency Is Enforced

Enforcing data residency in AI systems requires more than regional deployment. It requires clear separation of responsibilities across the AI stack, so that execution, control, and data paths can be governed independently.

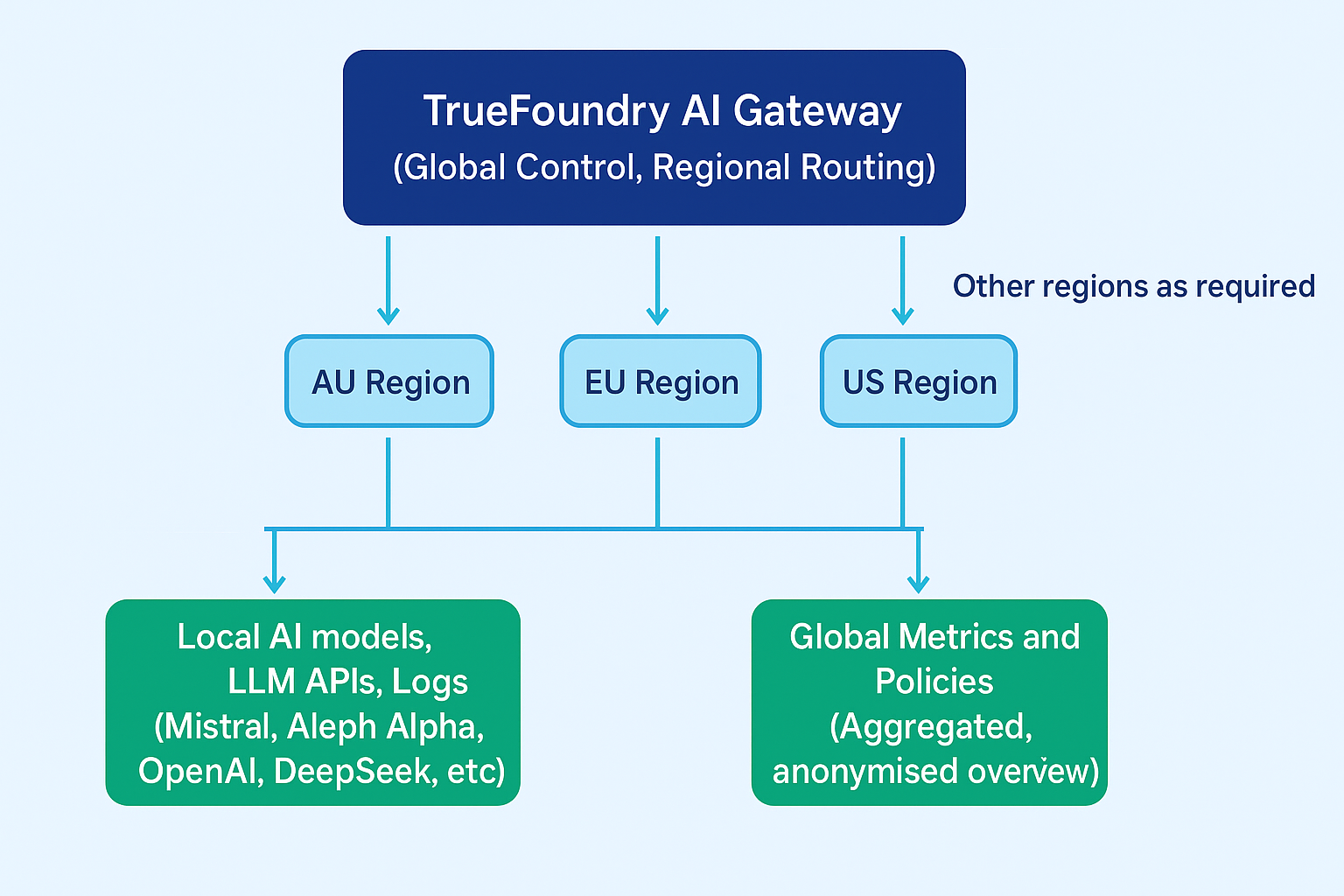

TrueFoundry is designed around a split-plane architecture that makes this possible.

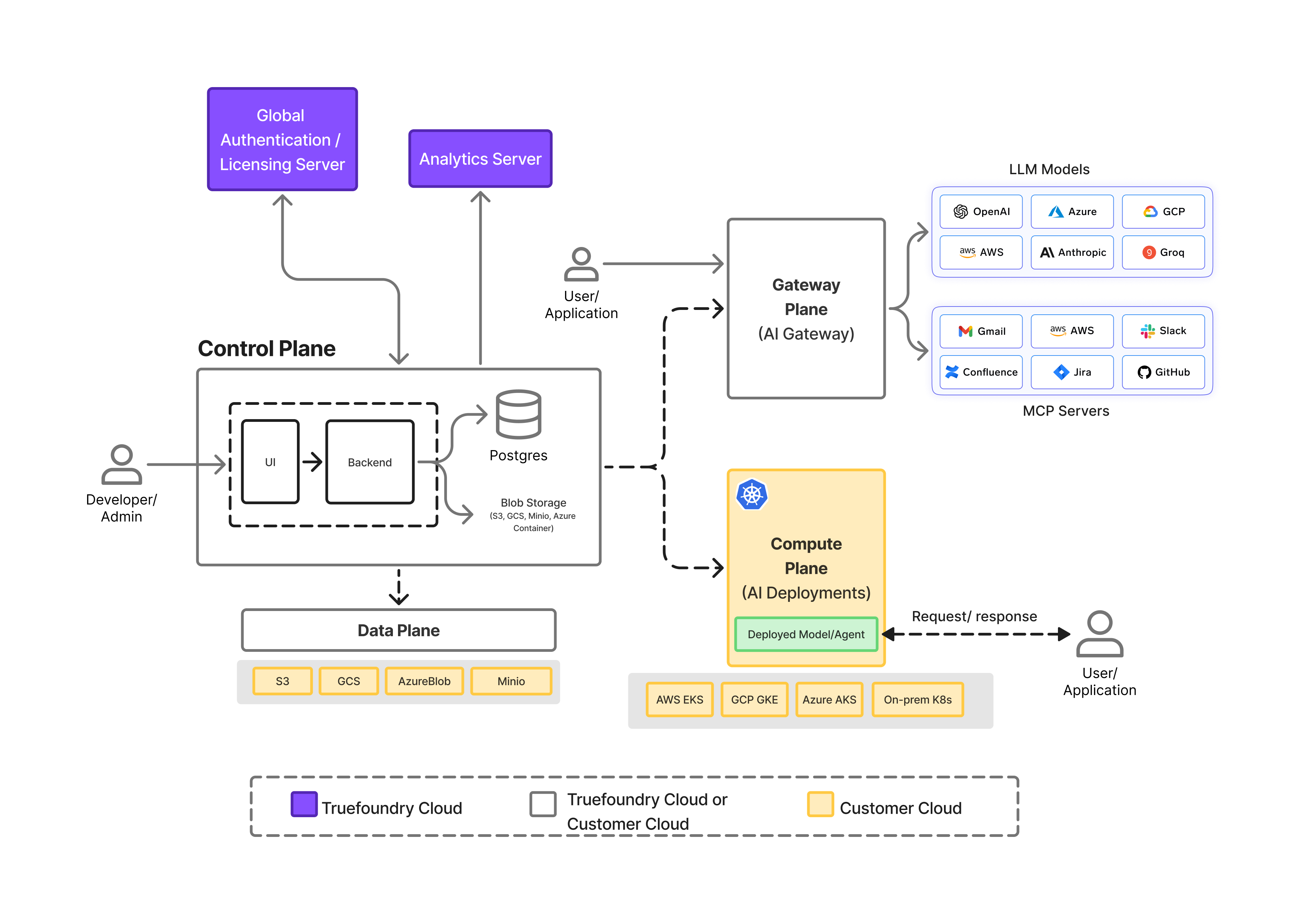

At a high level, the platform is composed of three distinct planes: the control plane, the gateway plane, and the compute plane.

This separation is foundational to how data residency is enforced reliably at runtime.

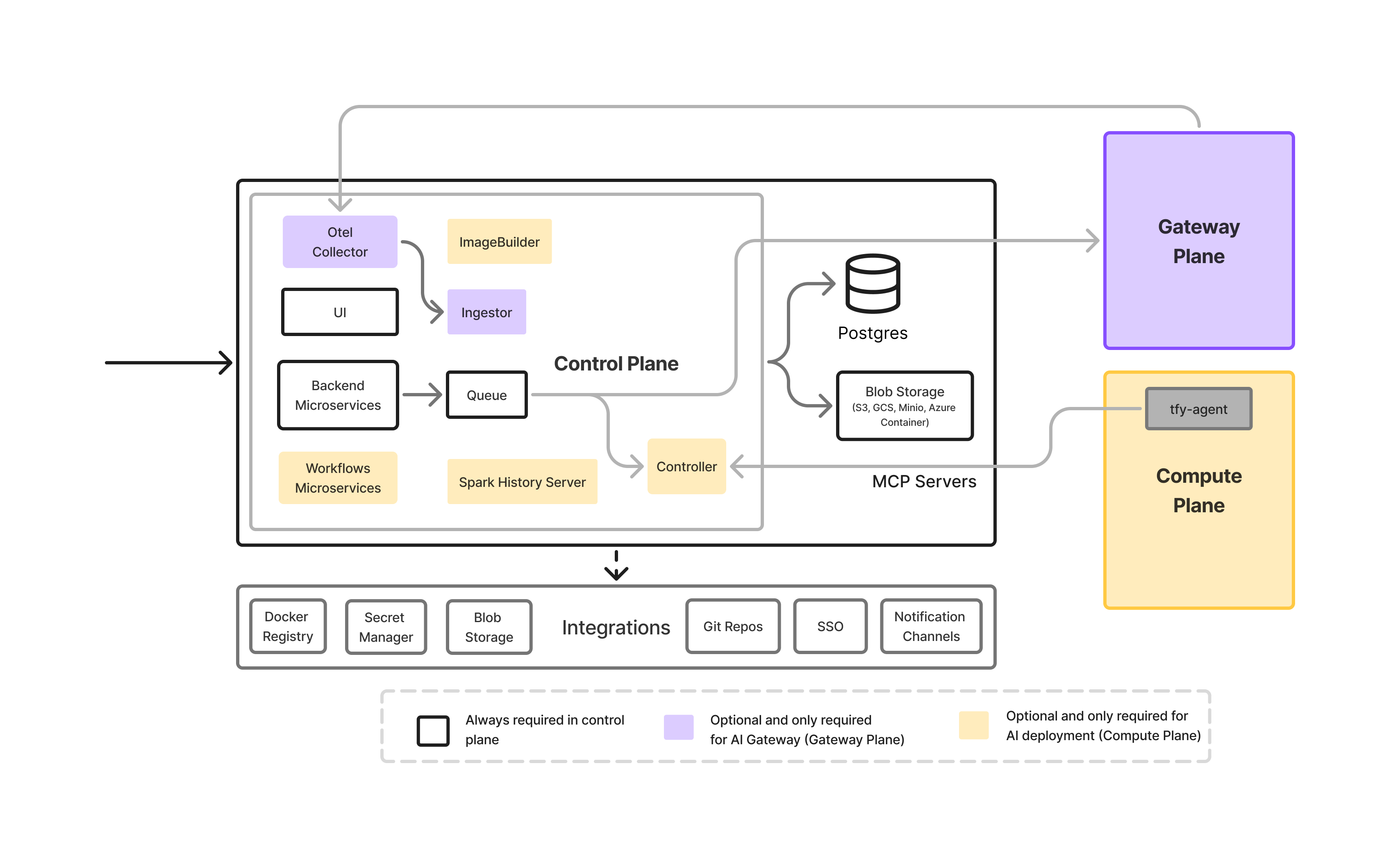

Control Plane: Configuration and Orchestration

The control plane is the orchestration layer of the TrueFoundry platform. It is responsible for:

- Managing platform configuration and policies

- Defining routing, residency, and access rules

- Coordinating gateway deployments across regions

- Managing metadata, configuration state, and governance settings

Critically, the control plane does not process inference traffic and does not execute workloads. It defines what should happen, not where data flows at runtime.

For enterprises with strict compliance requirements, TrueFoundry supports both:

- Hosted control plane deployments

- Self-hosted control plane deployments (enterprise option)

This allows organizations to choose the appropriate balance between operational simplicity and sovereignty requirements, without changing how residency enforcement works downstream.

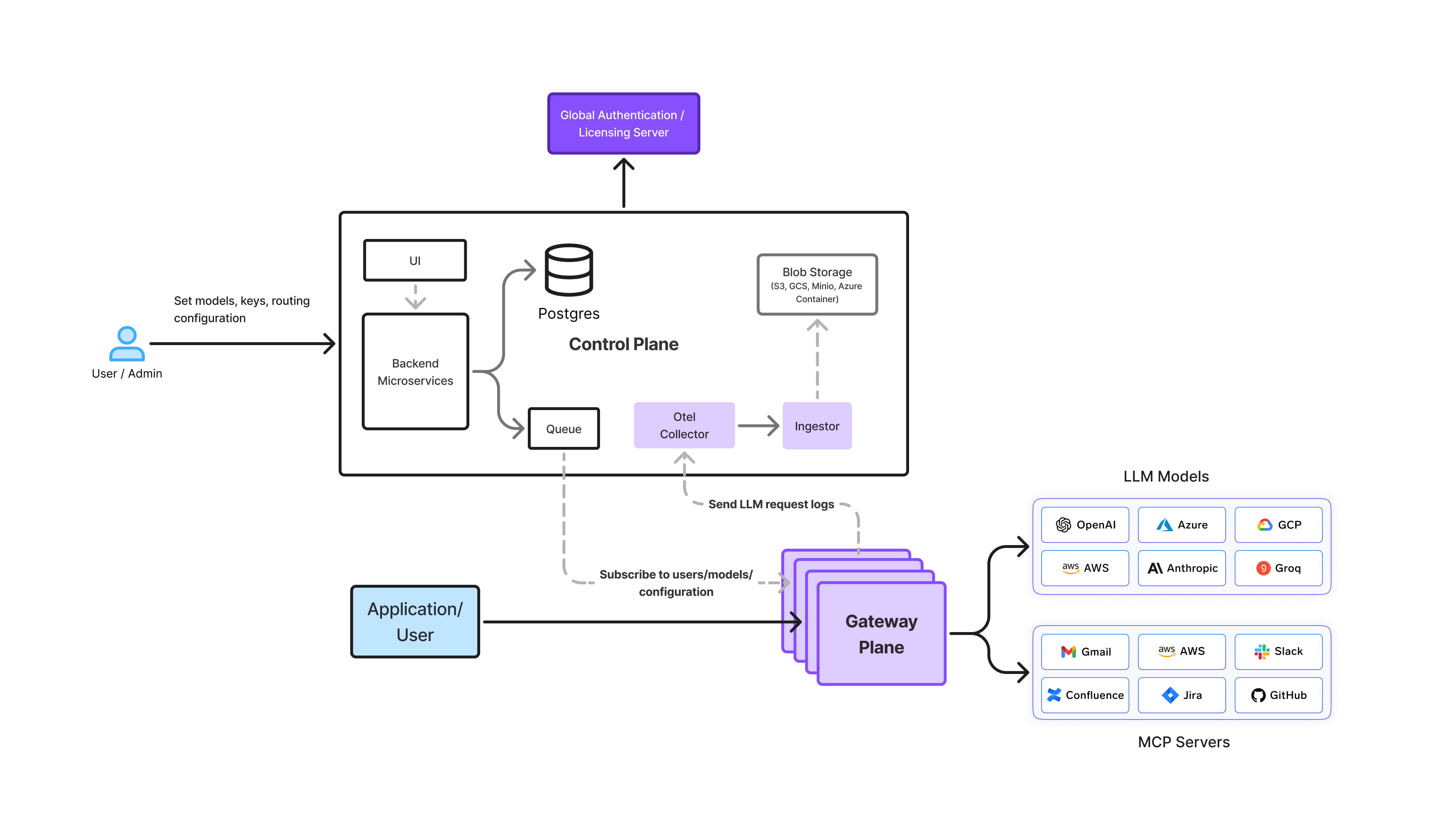

Gateway Plane: Runtime Enforcement Layer

The gateway plane is where data residency is actively enforced.

TrueFoundry AI Gateways sit between applications and all model providers, acting as:

- A traffic controller, deciding where requests are routed

- A compliance firewall, preventing non-compliant execution paths

- A policy enforcement point, applying residency rules at runtime

Every inference request, retry, failover, agent invocation, and observability event passes through the gateway. This gives it full visibility into:

- Model selection

- Routing and fallback decisions

- Agent and MCP tool execution

- Prompts, responses, and telemetry

Because of this, the gateway plane is the only layer capable of enforcing data residency as a hard constraint.

If a request cannot be satisfied within configured residency boundaries, the gateway fails the request closed rather than silently routing it to a non-compliant region.

This is the key difference between runtime enforcement and best-effort configuration.

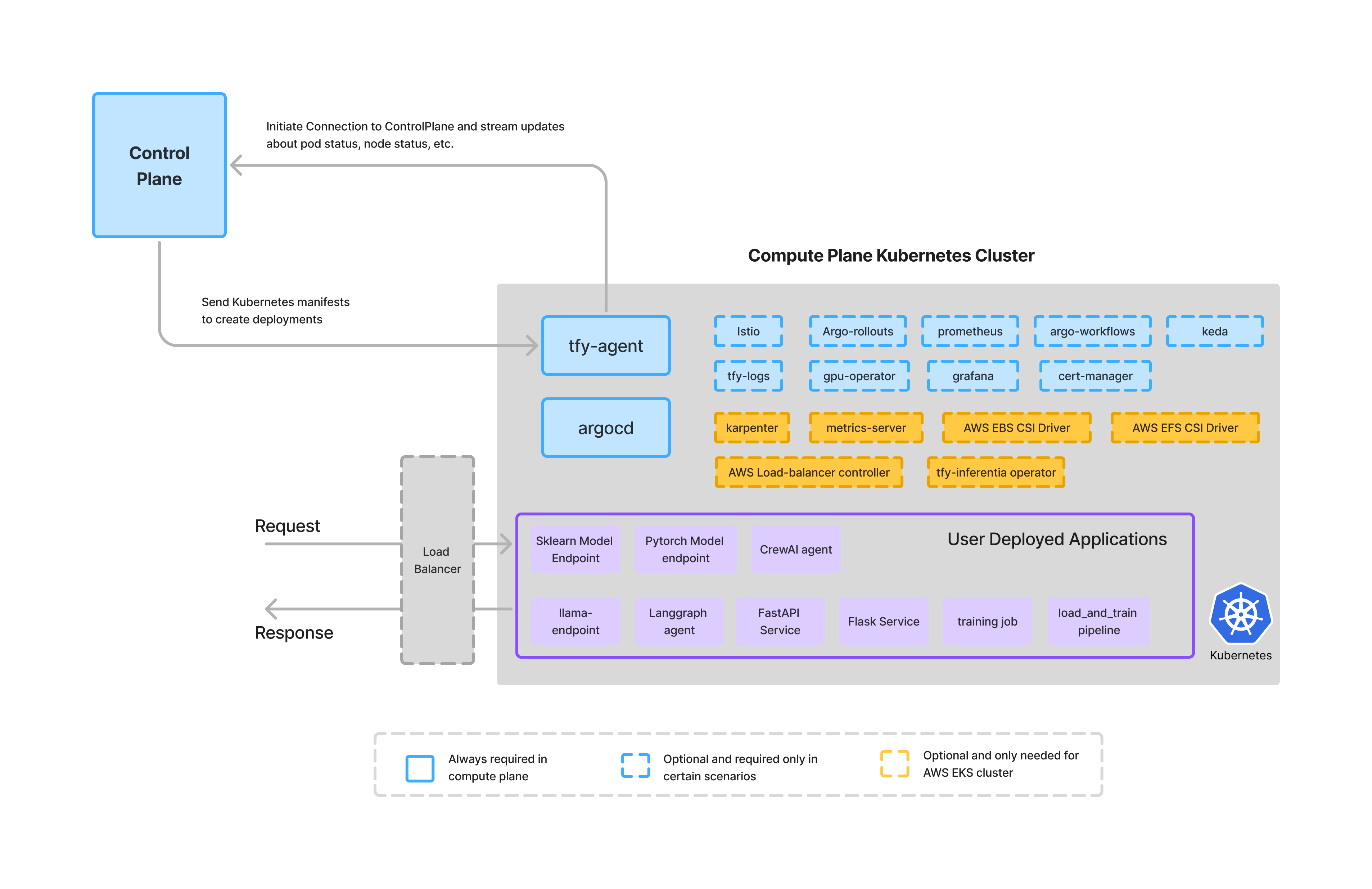

Compute Plane: Customer-Owned Execution Environment

The compute plane is where applications, agents, and workloads actually run.

In TrueFoundry, the compute plane:

- Always runs inside customer-owned infrastructure

- Is typically one or more Kubernetes clusters (EKS, GKE, AKS, OpenShift, or on-prem)

- Is never operated or accessed directly by TrueFoundry

This design ensures that:

- Application code never leaves the customer’s environment

- Inference requests originate from customer-controlled infrastructure

- Data residency guarantees are not undermined by shared execution environments

TrueFoundry does not execute customer workloads on shared compute. Instead, it integrates with the customer’s existing clusters or helps provision new ones, keeping execution firmly within the organization’s trust boundary.

Why This Architecture Matters for Data Residency

This separation of planes enables TrueFoundry to enforce data residency without compromise:

- Control plane defines residency policy

- Gateway plane enforces it at runtime

- Compute plane ensures execution stays within customer boundaries

Because enforcement happens at the gateway, where routing, retries, agents, and logs converge, data residency holds even under:

- Failures and retries

- Multi-model routing

- Agentic workflows

- High-volume observability

This is what allows data residency to become a system property, not an assumption tied to deployment diagrams.

How TrueFoundry Enforces Data Residency

Data residency in AI systems is not a single switch—it must be enforced across execution, routing, and storage. In TrueFoundry, this is achieved through three complementary enforcement modes that together cover the full lifecycle of AI data.

Each mode addresses a different class of residency risk and can be used independently or in combination, depending on enterprise requirements.

1. Data Never Leaves Your Environment

For organizations with the strictest residency and compliance needs, TrueFoundry enables a deployment model where data never leaves the customer’s environment.

In this mode:

- All application workloads run inside customer-owned Kubernetes clusters

- Models, artifacts, and inference traffic remain within the customer’s cloud account or on-prem environment

- No customer data is processed on shared compute owned by TrueFoundry

- Data egress to external systems can be fully eliminated

This applies across both:

- Self-hosted control plane deployments

- Managed control plane deployments, where customers still retain control over gateway region, storage, and execution boundaries

By ensuring that execution and data paths remain entirely within customer-controlled infrastructure, this mode provides the strongest possible residency guarantees and simplifies regulatory audits.

2. Data Constrained to a Specific Country or Region

Many enterprises need to operate globally while ensuring that data for a given geography never crosses jurisdictional boundaries.

TrueFoundry enforces this through region-specific AI Gateway deployments:

- Gateway endpoints are deployed in specific regions or countries

- Requests routed through a given gateway endpoint are processed only within that region

- Routing, retries, and failover paths are constrained to region-local infrastructure

Applications explicitly choose which regional gateway endpoint to use. This makes data residency:

- Explicit, not implicit

- Configurable per workload or environment

- Enforceable at runtime, not just at deployment

If no residency-compliant execution path exists for a request, the gateway fails the request closed rather than routing it to another region. This ensures that availability mechanisms never override compliance intent.
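As an illustration of explicit endpoint selection, the sketch below maps a workload's residency region to a gateway base URL and refuses to fall back to any default. The region keys, the `example.com` hostnames, and the `gateway_url_for` helper are all hypothetical placeholders, not real TrueFoundry endpoints or APIs.

```python
# Hypothetical mapping from residency region to gateway base URL.
# The hostnames are placeholders, not real TrueFoundry endpoints.
REGIONAL_GATEWAYS = {
    "eu": "https://gateway.eu.example.com",
    "us": "https://gateway.us.example.com",
    "in": "https://gateway.in.example.com",
}


class ResidencyError(Exception):
    """Raised when no gateway is configured for the required region."""


def gateway_url_for(region: str) -> str:
    """Return the gateway URL for a region, with no cross-region default."""
    try:
        return REGIONAL_GATEWAYS[region]
    except KeyError:
        # Fail closed: an unknown region must not fall back to another one.
        raise ResidencyError(f"no gateway configured for region {region!r}") from None


if __name__ == "__main__":
    print(gateway_url_for("eu"))
```

Because the lookup has no default branch, a workload that is misconfigured with an unsupported region surfaces an error at startup instead of quietly sending traffic elsewhere.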

3. Region-Specific Storage per Workload

Inference and execution are only part of the data residency story. Logs, traces, prompts, and telemetry often carry equally sensitive information and must follow the same residency rules.

TrueFoundry allows enterprises to enforce residency at the storage layer by:

- Using region-specific tracing and logging projects

- Supporting customer-managed storage buckets deployed in specific regions

- Ensuring observability data is written only to approved regional storage

This makes it possible to:

- Store European data exclusively in EU regions

- Keep regulated workloads (e.g., ITAR, financial, healthcare) confined to national boundaries

- Isolate data across regions even within the same global deployment

Because these storage choices are integrated directly into the AI Gateway and SDK configuration, observability data follows the same residency guarantees as inference traffic.
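One way to picture per-workload storage residency is a configuration check that runs before any trace or log is written. The sketch below is illustrative only: the workload names, bucket names, region identifiers, and the `storage_for` helper are assumptions made for this example and do not reflect the actual TrueFoundry configuration schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageTarget:
    bucket: str  # hypothetical bucket name
    region: str  # region the bucket is deployed in


# Hypothetical per-workload configuration: each workload names the regions
# it may write to and the storage target for its traces and logs.
WORKLOAD_STORAGE = {
    "eu-support-bot": (
        frozenset({"eu-west-1", "eu-central-1"}),
        StorageTarget("traces-eu", "eu-west-1"),
    ),
    "us-claims-agent": (
        frozenset({"us-east-1"}),
        StorageTarget("traces-us", "us-east-1"),
    ),
}


def storage_for(workload: str) -> StorageTarget:
    """Return the workload's storage target, rejecting out-of-region buckets."""
    allowed_regions, target = WORKLOAD_STORAGE[workload]
    if target.region not in allowed_regions:
        raise ValueError(
            f"{workload}: bucket {target.bucket!r} is in {target.region}, "
            f"outside allowed regions {sorted(allowed_regions)}"
        )
    return target


if __name__ == "__main__":
    print(storage_for("eu-support-bot"))
```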

Why These Three Modes Matter Together

Each enforcement mode solves a different problem:

- Environment-level isolation prevents uncontrolled data egress

- Region-level gateways constrain runtime execution paths

- Region-specific storage closes observability and logging gaps

Together, they ensure that data residency is enforced:

- Across inference, agents, and tools

- Across normal execution and failure scenarios

- Across data at rest and data in motion

This layered approach is what allows TrueFoundry to turn data residency from a best-effort configuration into a verifiable, runtime-enforced system property.

In TrueFoundry, data residency is enforced through multiple, explicit layers inside the AI Gateway, each addressing a different class of runtime risk.

These layers work together to ensure that residency guarantees hold under real-world conditions.

How Data Residency Is Enforced at Runtime in the TrueFoundry AI Gateway

In AI systems, data residency guarantees only hold if they are enforced at runtime, across every execution path, not just during steady-state operation. In TrueFoundry, the AI Gateway is the enforcement point where routing decisions, retries, agent execution, and observability converge.

The following mechanisms explain how data residency is enforced deterministically inside the TrueFoundry AI Gateway.

Inference Routing & Model Residency

Models in TrueFoundry are registered with explicit region affinity. The AI Gateway evaluates residency constraints before routing any request and only selects model endpoints that are eligible for the workload’s allowed region.

This prevents:

- Accidental use of globally hosted or non-resident models

- Cross-region routing during load balancing

- Residency drift as new models are added or existing models are updated

Because residency is treated as a hard routing constraint, not a preference, non-compliant models are never considered—even if they are available or faster.
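The difference between a hard constraint and a preference can be sketched in a few lines. In this minimal example, residency is applied as a filter before any ranking, so a faster out-of-region endpoint never becomes a candidate. `ModelEndpoint`, `select_endpoint`, and the latency-based ranking are assumptions for illustration, not the gateway's actual implementation.

```python
from dataclasses import dataclass
from typing import Iterable, Set


@dataclass(frozen=True)
class ModelEndpoint:
    name: str
    region: str        # region affinity recorded at registration time
    latency_ms: float  # observed latency, used only to rank candidates


class NoCompliantEndpoint(Exception):
    """Raised when no registered endpoint satisfies the residency constraint."""


def select_endpoint(endpoints: Iterable[ModelEndpoint],
                    allowed_regions: Set[str]) -> ModelEndpoint:
    # Residency is applied as a filter first, so it cannot be traded for speed.
    candidates = [e for e in endpoints if e.region in allowed_regions]
    if not candidates:
        raise NoCompliantEndpoint(f"no endpoint in {sorted(allowed_regions)}")
    # Only compliant endpoints are ranked.
    return min(candidates, key=lambda e: e.latency_ms)


if __name__ == "__main__":
    registry = [
        ModelEndpoint("fast-global", "us-east-1", 20.0),
        ModelEndpoint("eu-a", "eu-west-1", 90.0),
        ModelEndpoint("eu-b", "eu-central-1", 60.0),
    ]
    print(select_endpoint(registry, {"eu-west-1", "eu-central-1"}).name)
```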

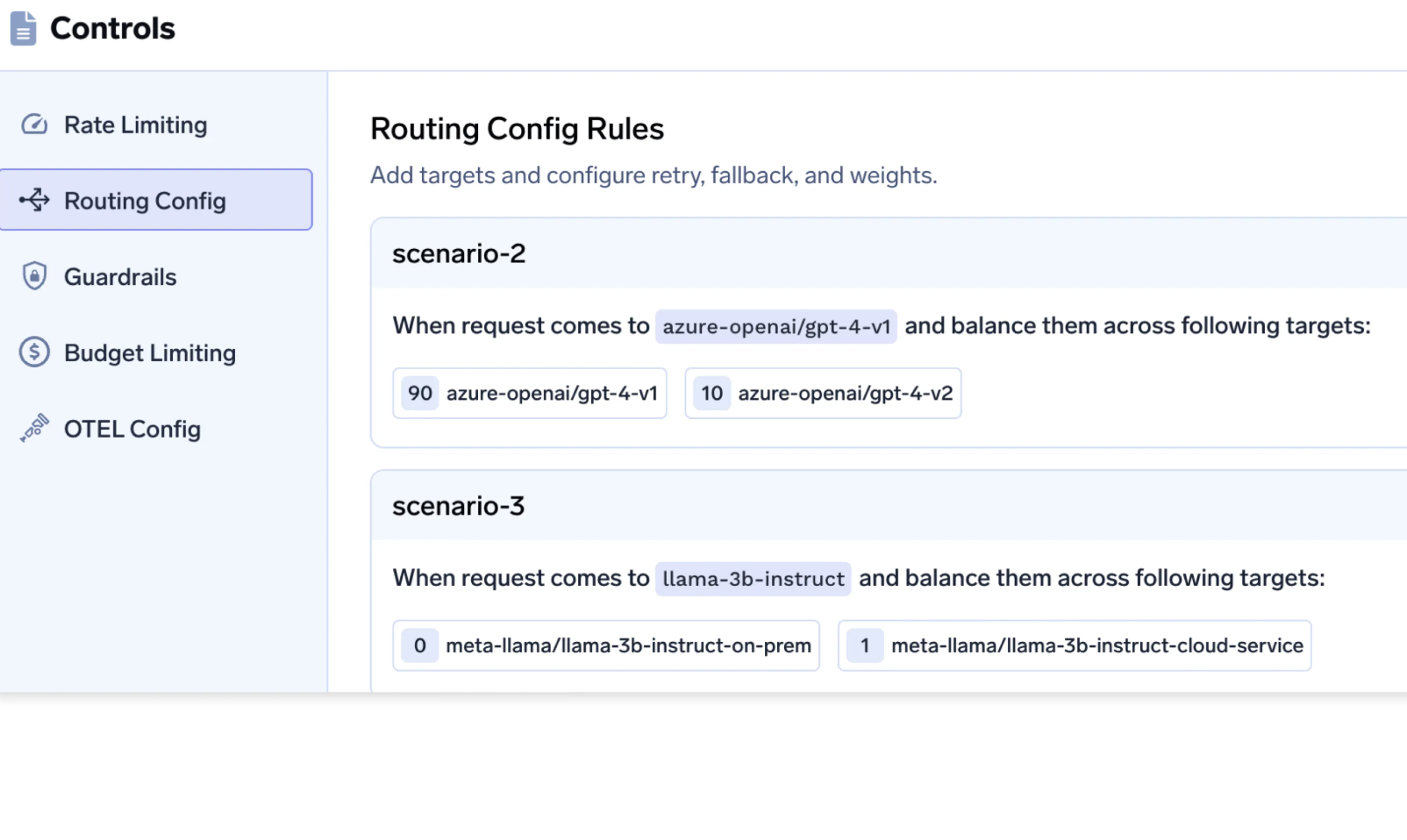

Retry, Failover & High Availability Controls

Retries and failover paths are the most common source of silent data residency violations in AI systems.

TrueFoundry’s AI Gateway enforces:

- Region-locked retry pools, ensuring retries never leave the allowed region

- Residency-aware failover, where fallback targets are constrained to the same jurisdiction

- Fail-closed behavior, where requests are rejected if no residency-compliant execution path exists

This ensures that availability mechanisms never override compliance intent. If a compliant path is unavailable, the system fails explicitly rather than routing data across regions.
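A region-locked retry pool with fail-closed behavior might look like the following sketch. The function name, the `(name, region)` endpoint tuples, and the `send` callback are hypothetical; the point is only that the retry loop iterates over in-region endpoints and raises when they are exhausted, rather than widening the pool.

```python
from typing import Callable, Iterable, Tuple


class NoCompliantPath(Exception):
    """Raised when every in-region endpoint has failed."""


def call_with_region_locked_failover(
    prompt: str,
    endpoints: Iterable[Tuple[str, str]],  # (endpoint name, region)
    allowed_region: str,
    send: Callable[[str, str], str],       # send(name, prompt) -> response
) -> str:
    # Build the retry pool from in-region endpoints only.
    pool = [name for name, region in endpoints if region == allowed_region]
    last_error = None
    for name in pool:
        try:
            return send(name, prompt)
        except ConnectionError as exc:
            last_error = exc  # try the next in-region endpoint
    # Fail closed: out-of-region endpoints are never attempted.
    raise NoCompliantPath(
        f"all endpoints in {allowed_region!r} failed"
    ) from last_error


if __name__ == "__main__":
    def flaky_send(name: str, prompt: str) -> str:
        if name == "eu-1":
            raise ConnectionError("timeout")
        return f"{name}:{prompt}"

    eps = [("eu-1", "eu"), ("us-1", "us"), ("eu-2", "eu")]
    print(call_with_region_locked_failover("hello", eps, "eu", flaky_send))
```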

Agent & MCP Tool Execution

For agentic workloads, data residency must remain consistent across model inference and downstream tool invocation.

TrueFoundry enforces:

- Region-scoped agent execution environments

- Prevention of cross-region MCP tool invocation

- Consistent residency policies across multi-step agent workflows

This eliminates a common failure mode where inference remains compliant, but agents leak data indirectly through tools or MCP servers deployed in other regions.
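To make the tool-invocation check concrete, here is a minimal sketch in which every step of an agent workflow passes through the same region comparison before the tool runs. The registry layout, `invoke_tool`, and `run_workflow` are assumptions for illustration and are not part of the MCP specification or the TrueFoundry API.

```python
from typing import Callable, Dict, List, Tuple


class CrossRegionToolBlocked(Exception):
    """Raised when an agent tries to call a tool hosted in another region."""


# Hypothetical registry: tool name -> (region, handler).
ToolRegistry = Dict[str, Tuple[str, Callable[[str], str]]]


def invoke_tool(registry: ToolRegistry, tool_name: str,
                agent_region: str, payload: str) -> str:
    tool_region, handler = registry[tool_name]
    if tool_region != agent_region:
        # Block before the payload is handed to the tool.
        raise CrossRegionToolBlocked(
            f"{tool_name!r} runs in {tool_region!r}, agent is in {agent_region!r}"
        )
    return handler(payload)


def run_workflow(registry: ToolRegistry, agent_region: str,
                 steps: List[Tuple[str, str]]) -> List[str]:
    """Run (tool_name, payload) steps in order under one residency policy."""
    return [invoke_tool(registry, name, agent_region, payload)
            for name, payload in steps]


if __name__ == "__main__":
    tools: ToolRegistry = {
        "search": ("eu", lambda q: f"results for {q}"),
        "crm": ("us", lambda q: f"record for {q}"),
    }
    print(run_workflow(tools, "eu", [("search", "invoice 42")]))
```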

Observability, Logs & Telemetry

Observability pipelines are frequently overlooked in data residency designs, despite often containing highly sensitive data.

TrueFoundry’s AI Gateway ensures that:

- Prompts, responses, and traces can be stored in-region

- Telemetry export respects the same residency constraints as inference

- Debugging and monitoring paths do not leak data across regional boundaries

This closes one of the most persistent residency gaps in AI systems, where inference is compliant but logs and traces are not.
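The same idea applies to telemetry export. The sketch below assumes each event carries a `region` field and each sink is tagged with the region it is deployed in; `TelemetrySink` and `export_event` are hypothetical names used only for this example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TelemetrySink:
    name: str
    region: str
    events: List[dict] = field(default_factory=list)


def export_event(event: dict, sinks: List[TelemetrySink]) -> List[str]:
    """Write the event to in-region sinks only; return the sinks written to."""
    written = []
    for sink in sinks:
        if sink.region == event["region"]:
            sink.events.append(event)
            written.append(sink.name)
    return written


if __name__ == "__main__":
    sinks = [TelemetrySink("eu-traces", "eu"), TelemetrySink("central-logs", "us")]
    trace = {"region": "eu", "prompt": "redacted", "model": "eu-b"}
    print(export_event(trace, sinks))
```

An empty return value signals that no compliant sink exists, which a caller could treat as an alert condition rather than a reason to export elsewhere.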

Why Runtime Enforcement Matters

These enforcement mechanisms apply uniformly across:

- Normal execution paths

- Retries and partial failures

- Multi-model routing

- Agentic and tool-driven workflows

Because enforcement happens before execution, data residency becomes a verifiable system property, not a best-effort configuration tied to infrastructure placement.

Common Data Residency Failure Scenarios and How TrueFoundry Prevents Them

Most data residency violations in AI systems are not caused by obvious misconfigurations. They emerge from edge cases and exception paths that are rarely tested until something goes wrong.

Below are the most common failure scenarios enterprises encounter and how the TrueFoundry AI Gateway is designed to prevent them.

Failure Scenario 1: Cross-Region Failover During Outages

What happens in many systems

A regional model endpoint becomes unavailable. The AI Gateway automatically retries or fails over to the next available endpoint, often in another region.

From an availability standpoint, this looks like success.

From a compliance standpoint, it is a silent violation.

How TrueFoundry prevents this

- Failover targets are constrained to the same region

- Retry pools are region-locked

- If no compliant endpoint exists, the request fails closed

This ensures that availability mechanisms never override residency policy.

Failure Scenario 2: Partial Residency in Multi-Model Setups

What happens in many systems

Some models are deployed in-region, while others (often backups or newer models) are globally hosted. Routing policies unintentionally select non-resident models.

How TrueFoundry prevents this

- Models are registered with explicit region affinity

- Residency is enforced as a hard routing constraint

- Non-compliant models are never eligible for selection

This makes residency guarantees resilient to model churn and experimentation.

Failure Scenario 3: Agent-Driven Cross-Region Tool Invocation

What happens in many systems

Inference runs locally, but agents invoke tools or MCP servers deployed in other regions, creating indirect data movement.

How TrueFoundry prevents this

- Agent execution and MCP tool access are region-scoped

- Cross-region tool invocation is blocked at the gateway

- Residency policies apply uniformly across multi-step workflows

This keeps residency consistent across inference and downstream execution.

Failure Scenario 4: Observability and Telemetry Leakage

What happens in many systems

Prompts, responses, and traces are exported to centralized logging or monitoring services outside the region, often by default.

How TrueFoundry prevents this

- Observability pipelines are residency-aware

- Telemetry export is explicitly configured and constrained

- Debugging paths respect the same residency rules as inference

This closes one of the most frequently overlooked compliance gaps in AI systems.

How Enterprises Can Verify Data Residency in TrueFoundry

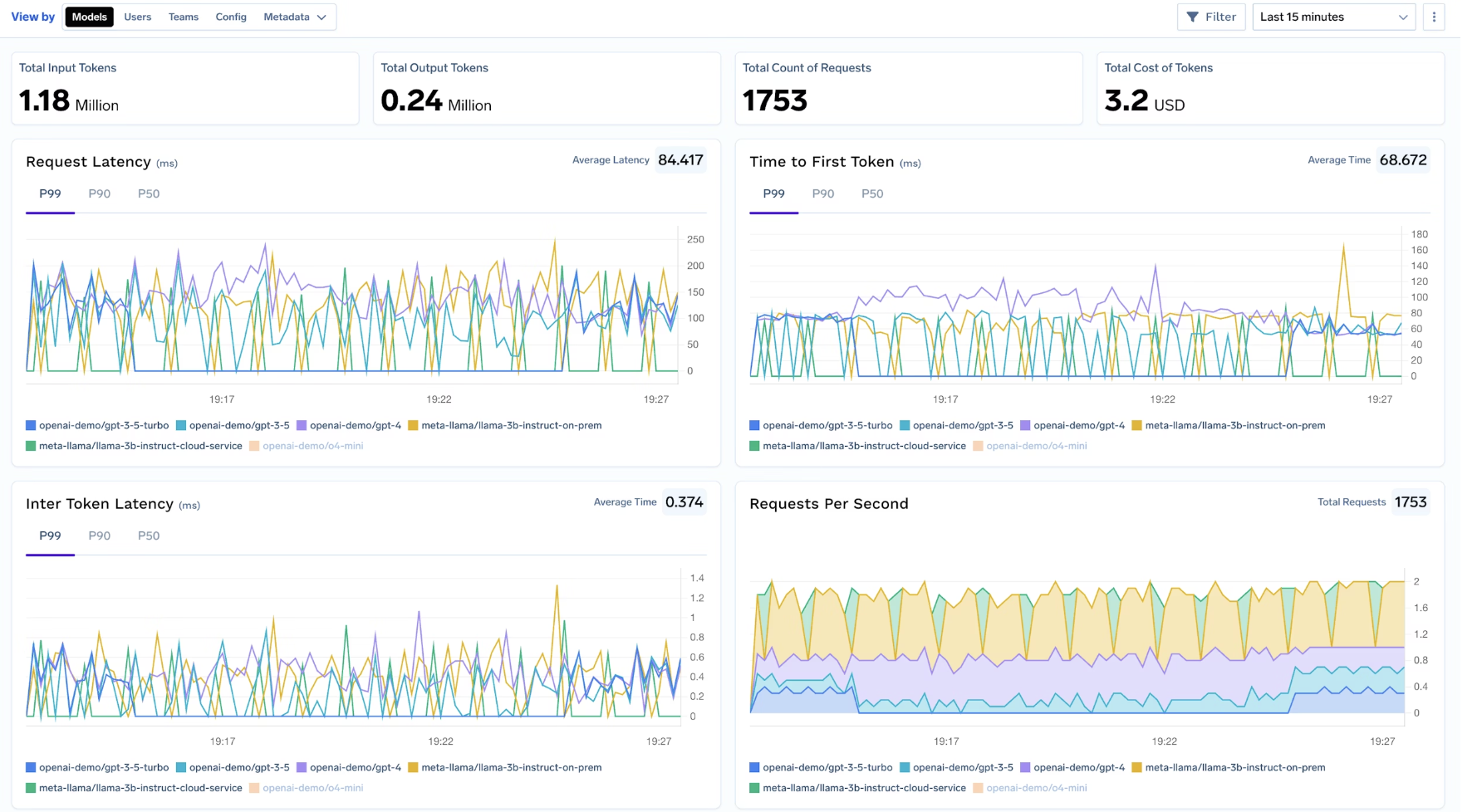

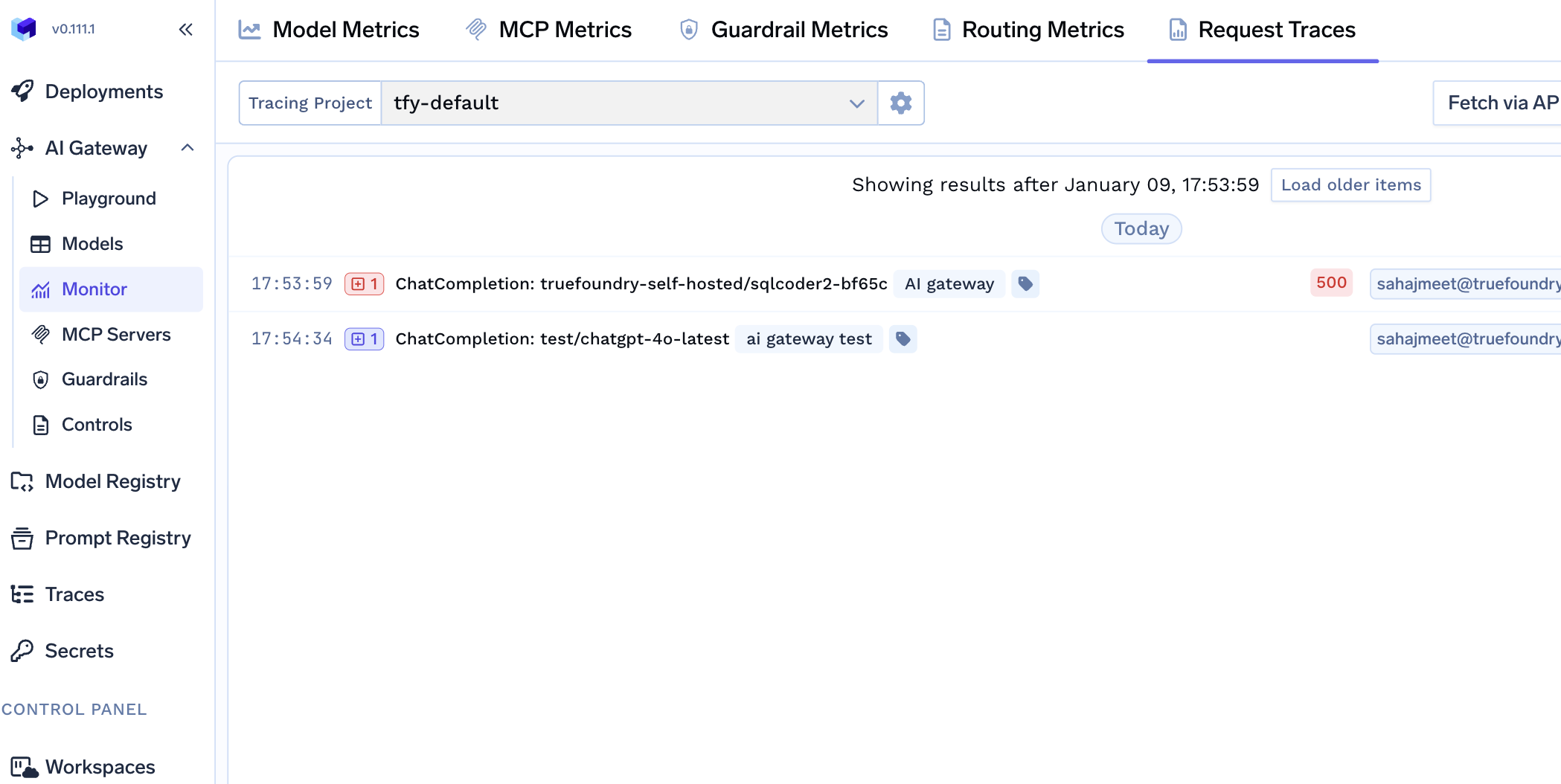

Residency guarantees are only meaningful if they can be verified and demonstrated. TrueFoundry enables enterprises to validate data residency through runtime visibility and auditability, not post-hoc assumptions.

Runtime Enforcement Visibility

The AI Gateway provides visibility into:

- Which model endpoint handled a request

- Which region the execution occurred in

- Whether any retry or fallback paths were triggered

This allows teams to confirm that every execution path remained compliant.

Audit-Ready Logs and Traces

For compliance and security reviews, TrueFoundry surfaces:

- Structured logs showing routing and execution decisions

- Region metadata associated with inference and agent actions

- Evidence that non-compliant paths were blocked

This makes it possible to prove residency during audits, rather than relying on architectural diagrams alone.
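As a rough illustration of how such evidence could be checked, the sketch below scans JSON log lines for requests that executed outside the allowed regions. The log schema (`request_id`, `region`, `decision`) is an assumption made for this example and is not the gateway's documented log format.

```python
import json
from typing import Iterable, List, Set


def find_residency_violations(log_lines: Iterable[str],
                              allowed_regions: Set[str]) -> List[str]:
    """Return request IDs that executed outside the allowed regions."""
    violations = []
    for line in log_lines:
        record = json.loads(line)
        # Blocked requests never executed, so they are evidence of
        # enforcement rather than violations.
        if record["decision"] == "blocked":
            continue
        if record["region"] not in allowed_regions:
            violations.append(record["request_id"])
    return violations


if __name__ == "__main__":
    sample = [
        '{"request_id": "r1", "region": "eu-west-1", "decision": "routed"}',
        '{"request_id": "r2", "region": "us-east-1", "decision": "blocked"}',
        '{"request_id": "r3", "region": "us-east-1", "decision": "routed"}',
    ]
    print(find_residency_violations(sample, {"eu-west-1"}))
```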

Testing Residency Under Failure Conditions

A key advantage of gateway-level enforcement is testability.

Enterprises can:

- Simulate regional outages

- Observe failover behavior

- Validate that requests fail closed rather than rerouting cross-region

This turns residency from a static requirement into a continuously verifiable system property.
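An outage drill of this kind can be rehearsed against a stand-in before running it on real infrastructure. The sketch below uses an in-memory `FakeRegionalGateway`, which is purely hypothetical and models only the behavior being tested: serve from the home region while it is healthy, and fail closed once it is not.

```python
class FakeRegionalGateway:
    """In-memory stand-in for a gateway, used only to illustrate the drill."""

    def __init__(self, home_region, endpoints):
        self.home_region = home_region
        self.endpoints = dict(endpoints)  # endpoint name -> region
        self.down = set()                 # endpoints currently unavailable

    def simulate_outage(self, region):
        """Mark every endpoint in the given region as unavailable."""
        self.down.update(n for n, r in self.endpoints.items() if r == region)

    def handle(self, prompt):
        """Serve from a healthy home-region endpoint, or fail closed."""
        for name, region in self.endpoints.items():
            if region == self.home_region and name not in self.down:
                return {"status": "ok", "endpoint": name, "region": region}
        return {"status": "failed_closed", "endpoint": None, "region": None}


def outage_drill(gateway):
    """Send one request before and one after a home-region outage."""
    before = gateway.handle("ping")
    gateway.simulate_outage(gateway.home_region)
    after = gateway.handle("ping")
    return before, after


if __name__ == "__main__":
    gw = FakeRegionalGateway("eu", {"eu-1": "eu", "us-1": "us"})
    print(outage_drill(gw))
```

The assertion worth automating is that the second response is a failure, not a success from another region.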

Conclusion

In modern AI systems, data residency cannot be ensured by deployment choices alone. Dynamic routing, retries, agent workflows, and observability pipelines all introduce execution paths where data can silently cross regional boundaries.

The AI Gateway is the only layer with sufficient context to prevent this. It sees every inference request, every retry, every agent action, and every trace emitted by the system. If residency is not enforced here, it cannot be enforced consistently anywhere else.

In TrueFoundry, data residency is treated as a runtime system property. Execution paths are constrained by design, exception cases fail closed, and enforcement is observable and auditable. This makes residency guarantees resilient not just during steady state, but under failure, scale, and change.

For enterprises deploying AI in regulated or multi-region environments, that distinction matters. Data residency is no longer a checkbox; it’s an architectural commitment. And the AI Gateway is where that commitment becomes real.
