Amazon SageMaker AI Pricing: A Detailed Breakdown and a Better Alternative

Introduction

For many engineering teams, Amazon SageMaker is the default starting point for machine learning on AWS. It offers a comprehensive ‘lab-in-a-box’ experience that removes the need to manage underlying servers.

However, as teams move from experimental notebooks to full-scale model deployment, the monthly bill often grows well beyond what they expected. The convenience of ‘managed ML’ comes with a premium that can silently erode margins.

This guide breaks down each component of SageMaker pricing, examines the markup on compute instances, and explains why cost-conscious enterprises are looking for alternatives.

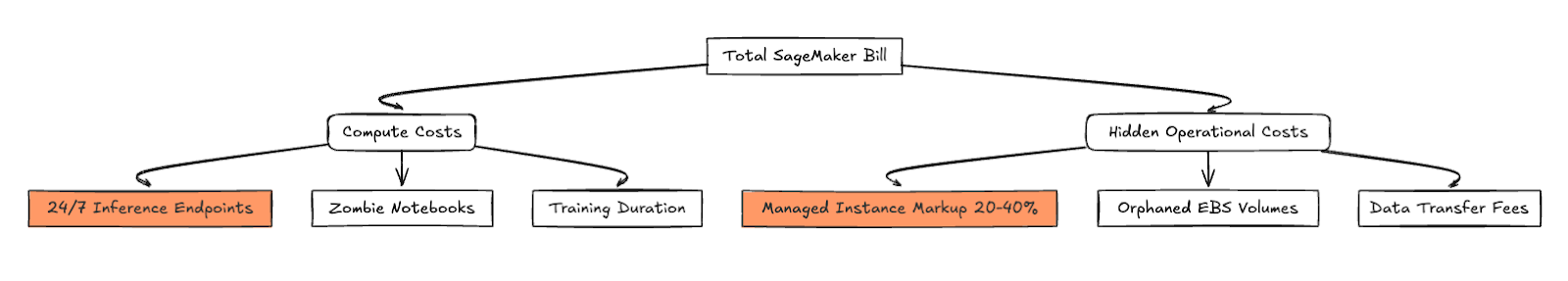

The SageMaker Pricing Model: Billed by Component

SageMaker does not bundle pricing into a single plan. Instead, you pay separately for every stage of the ML lifecycle. Here is how the costs break down:

Studio Notebooks (Development Costs)

SageMaker Studio notebooks are often mistaken for lightweight text editors. In reality, they are billed as running compute instances.

- Hourly Billing: Developers pay an hourly rate for the instance backing each Jupyter notebook session.

- The "Zombie" Problem: These instances are frequently left running overnight or on weekends. A developer who forgets to shut down a notebook on Friday afternoon will generate billable hours for the entire weekend.

- Hidden Drain: Without strict auto-shutdown policies, idle development environments become "zombie notebooks" that silently rack up SageMaker costs without contributing any value.
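To make the weekend scenario concrete, here is a minimal Python sketch of the arithmetic. The hourly rate is a hypothetical placeholder, not a published SageMaker price; substitute the on-demand rate for your own instance type and region.

```python
from datetime import datetime

# Hypothetical hourly rate for the instance behind one notebook (USD).
# Placeholder only: replace with the real rate for your instance type and region.
HOURLY_RATE_USD = 1.50


def idle_hours(stopped_working: datetime, resumed_working: datetime) -> float:
    """Hours an instance keeps running after its owner walks away."""
    return (resumed_working - stopped_working).total_seconds() / 3600


def idle_cost(stopped_working: datetime, resumed_working: datetime, hourly_rate: float) -> float:
    """Billable cost of those idle hours at a flat hourly rate."""
    return idle_hours(stopped_working, resumed_working) * hourly_rate


# A developer leaves at 17:00 on Friday and returns at 09:00 on Monday.
friday_evening = datetime(2024, 6, 7, 17, 0)   # a Friday
monday_morning = datetime(2024, 6, 10, 9, 0)   # the following Monday

hours = idle_hours(friday_evening, monday_morning)
cost = idle_cost(friday_evening, monday_morning, HOURLY_RATE_USD)
print(f"{hours:.0f} idle hours -> ${cost:.2f} for one forgotten notebook")
```

With these placeholder inputs, a single forgotten notebook bills 64 idle hours ($96.00); multiplied across a team and four weekends a month, the waste adds up quickly.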

Training Jobs (Model Building Costs)

Training costs are calculated based on the specific instance type used and the duration the resources remain active.

- Setup Time is Billable: You pay for the full duration of the training clusters, including the time spent spinning up the cluster and loading data, not just the time the GPU is crunching numbers.

- Instance Size Matters: Larger instance types (required for deep learning or LLMs) dramatically increase hourly costs.

- Data Transfer: Pulling massive datasets from S3 into training instances can incur overlooked data transfer charges, particularly when the data sits in a different region, and these appear as separate line items.
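The billing factors above can be combined into a small cost model. This is an illustration, not AWS's billing formula: it assumes setup time is billed at the same per-instance rate as training, and every rate below is a hypothetical placeholder. Same-region S3 reads are typically free, so the transfer term mainly matters for cross-region data.

```python
def training_job_cost(
    instance_count: int,
    hourly_rate: float,
    setup_minutes: float,
    compute_minutes: float,
    transfer_gb: float = 0.0,
    transfer_rate_per_gb: float = 0.0,
) -> float:
    """Estimate a training job's cost, counting setup time as billable.

    All rates are caller-supplied assumptions, not published AWS prices.
    """
    billable_hours = (setup_minutes + compute_minutes) / 60
    compute_cost = instance_count * hourly_rate * billable_hours
    transfer_cost = transfer_gb * transfer_rate_per_gb
    return compute_cost + transfer_cost


# Hypothetical job: 4 instances at $4.00/hour, 15 min of setup, 45 min of training.
total = training_job_cost(4, 4.00, setup_minutes=15, compute_minutes=45)
setup_only = training_job_cost(4, 4.00, setup_minutes=15, compute_minutes=0)
print(f"Total: ${total:.2f}, of which setup: ${setup_only:.2f}")
```

In this example a quarter of the bill ($4.00 of $16.00) pays for minutes in which no training happens; the shorter the job, the larger that share becomes.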

Inference Endpoints (Deployment Costs)

For most production teams, inference is the "iceberg" of Amazon SageMaker pricing, often accounting for an estimated 70–80% of the total bill.

- Always-On Billing: Real-time inference endpoints are billed 24/7, regardless of whether anyone is querying the model.

- Scaling Costs: To handle peak traffic, teams often over-provision resources. Because SageMaker autoscaling can be conservative, you end up paying for idle capacity just to ensure availability.
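A minimal sketch of why always-on billing hurts: an endpoint's monthly cost depends only on how many instances are provisioned, while the share of that cost doing useful work depends on average utilization. The instance count, rate, and utilization below are hypothetical; 730 hours is a common approximation for one month.

```python
HOURS_PER_MONTH = 730  # 24 * 365 / 12, rounded; a common monthly billing approximation


def endpoint_monthly_cost(instance_count: int, hourly_rate: float) -> float:
    """An always-on endpoint bills every hour, whether or not it serves traffic."""
    return instance_count * hourly_rate * HOURS_PER_MONTH


def idle_spend(instance_count: int, hourly_rate: float, avg_utilization: float) -> float:
    """Portion of the monthly bill spent on provisioned-but-unused capacity."""
    if not 0.0 <= avg_utilization <= 1.0:
        raise ValueError("avg_utilization must be between 0 and 1")
    return endpoint_monthly_cost(instance_count, hourly_rate) * (1 - avg_utilization)


# Hypothetical endpoint sized for peak traffic: 4 instances at $1.25/hour,
# averaging 25% utilization because peaks are short.
monthly = endpoint_monthly_cost(4, 1.25)
wasted = idle_spend(4, 1.25, avg_utilization=0.25)
print(f"Monthly bill: ${monthly:,.2f}; paid for idle capacity: ${wasted:,.2f}")
```

Here $2,737.50 of a $3,650.00 monthly bill pays for capacity that exists only to absorb peaks.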

The “Managed Service Premium” Behind SageMaker Pricing

One of the biggest drivers of high AWS SageMaker costs is the managed-service markup. While you are technically using Amazon EC2 instances under the hood, SageMaker wraps them in a management layer and charges a premium for it.

Comparing “ml.” Instances vs Standard EC2

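- SageMaker instances (prefixed with ml.) typically cost 20–40% more than the equivalent raw EC2 instances, despite running on identical hardware.

- Because the premium is a percentage of the hourly rate, it compounds with scale: every additional instance, every extra hour of uptime, and every step up to a larger GPU instance increases the absolute dollars paid for management.

- Note: Prices are estimates based on US East (N. Virginia) on-demand rates and are subject to change.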
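The compounding effect is simple arithmetic. In this sketch the $1.00 EC2 rate and $1.25 ml. rate are hypothetical placeholders chosen to give a 25% markup within the 20–40% range; they are not actual AWS prices for any instance type.

```python
HOURS_PER_YEAR = 24 * 365


def markup_pct(ml_rate: float, ec2_rate: float) -> float:
    """Percentage premium of an ml. instance over its EC2 equivalent."""
    return (ml_rate / ec2_rate - 1) * 100


def annual_premium(ml_rate: float, ec2_rate: float, instance_count: int,
                   hours_per_year: int = HOURS_PER_YEAR) -> float:
    """Extra dollars per year paid for the managed layer across a fleet."""
    return (ml_rate - ec2_rate) * instance_count * hours_per_year


EC2_RATE = 1.00  # hypothetical USD/hour for the raw EC2 instance
ML_RATE = 1.25   # hypothetical USD/hour for the matching ml. instance

print(f"Markup: {markup_pct(ML_RATE, EC2_RATE):.0f}%")
for fleet in (1, 10, 50):
    premium = annual_premium(ML_RATE, EC2_RATE, fleet)
    print(f"{fleet:>3} always-on instances: ${premium:,.0f}/year premium")
```

The percentage never changes, but the absolute premium scales linearly with fleet size and uptime: $2,190 a year for one always-on instance becomes $109,500 for fifty.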

What You Are Paying For

The premium isn't arbitrary. AWS charges it in exchange for handling the heavy lifting:

- OS patching and health checks.

- Driver updates (CUDA, etc.).

- Service orchestration and endpoint management.

For small teams without platform engineers, this is a fair trade. However, at scale, this convenience premium transforms into a recurring tax on infrastructure usage that provides diminishing returns.

Other Hidden Costs You Might Have To Pay

Beyond the headline compute rates, several operational inefficiencies inflate the final SageMaker bill. These costs are often discovered only after the finance department flags a budget overrun.

Storage Overhead from EBS Volumes

Every notebook and training job attaches an Elastic Block Store (EBS) volume by default to store data and code.

- Orphaned Storage: When a notebook instance is terminated, the attached EBS volume is not always automatically deleted.

- Silent Accumulation: These volumes persist, creating "orphaned storage" fees that accumulate quietly without active monitoring.
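Because orphaned volumes are rarely cleaned up, the monthly storage charge ratchets upward and the cumulative cost grows faster than linearly. A minimal sketch, assuming a steady number of new orphaned volumes each month; the volume size and per-GB-month price are hypothetical placeholders, not quoted EBS rates.

```python
def orphaned_storage_cost(new_volumes_per_month: int, gb_per_volume: float,
                          price_per_gb_month: float, months: int) -> float:
    """Cumulative storage bill when orphaned volumes are never deleted.

    In month m, every volume orphaned in months 1..m is still billed.
    """
    total = 0.0
    for month in range(1, months + 1):
        live_gb = new_volumes_per_month * month * gb_per_volume
        total += live_gb * price_per_gb_month
    return total


# Hypothetical: 5 volumes of 100 GB orphaned per month at $0.10 per GB-month.
for horizon in (1, 6, 12):
    cost = orphaned_storage_cost(5, 100, 0.10, horizon)
    print(f"After {horizon:>2} months: ${cost:,.2f}")
```

With these inputs the first month costs $50, but by month 12 the bill is $600 a month and the running total reaches $3,900, even though no single month looked alarming when it arrived.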

Multi-Model Endpoint Limitations

Multi-Model Endpoints (MMEs) promise cost savings by allowing you to host multiple models on a single endpoint using a shared serving container.

- The Latency Trap: In practice, loading models into memory on demand causes "cold start" latency.

- The Reversion: To fix user experience issues, teams often revert to dedicated endpoints, reintroducing high, always-on infrastructure costs.
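A simple model shows why cold starts dominate the user experience. It assumes each request either finds its model already in memory (a cache hit) or waits for the full load time first; the latencies and hit rate are hypothetical. Even a small miss rate drags tail latency up to the load time.

```python
def mean_latency_ms(warm_ms: float, load_ms: float, hit_rate: float) -> float:
    """Average latency when a fraction (1 - hit_rate) of requests pay a model load."""
    return warm_ms + (1 - hit_rate) * load_ms


def percentile_latency_ms(warm_ms: float, load_ms: float, hit_rate: float,
                          percentile: float) -> float:
    """Latency at a given percentile under the two-outcome model above.

    If more than (100 - percentile)% of requests are cold, that percentile
    falls among the cold requests and includes the full load time.
    """
    cold_fraction = 1 - hit_rate
    if cold_fraction > 1 - percentile / 100:
        return warm_ms + load_ms
    return warm_ms


# Hypothetical: 20 ms warm inference, 3 s to load a model, 95% cache hit rate.
print(f"mean: {mean_latency_ms(20, 3000, 0.95):.0f} ms")
print(f"p50:  {percentile_latency_ms(20, 3000, 0.95, 50):.0f} ms")
print(f"p99:  {percentile_latency_ms(20, 3000, 0.95, 99):.0f} ms")
```

With these inputs the median request takes 20 ms but p99 is 3,020 ms, which is why teams with latency targets often retreat to dedicated endpoints.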

Limited Spot Instance Support for Inference

AWS Spot Instances offer up to 90% discounts, but they are risky for inference in SageMaker.

- Reliability Issues: SageMaker lacks robust, native fallback mechanisms to instantly switch to On-Demand instances if a Spot node is reclaimed.

- Forced Premium: To ensure reliability, teams are forced to rely on expensive On-Demand instances for production inference, missing out on massive potential savings.
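To size the savings being left on the table, here is a sketch comparing an on-demand fleet with the same fleet priced at a Spot discount. The rate and the 50% discount are hypothetical; actual Spot discounts vary by instance type, region, and time, and "up to 90%" is a ceiling rather than a typical figure.

```python
HOURS_PER_MONTH = 730  # common monthly billing approximation


def monthly_fleet_cost(instance_count: int, hourly_rate: float, discount: float = 0.0) -> float:
    """Monthly cost of an always-on fleet, optionally at a fractional discount."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return instance_count * hourly_rate * (1 - discount) * HOURS_PER_MONTH


# Hypothetical inference fleet: 8 instances at $2.00/hour on demand.
on_demand = monthly_fleet_cost(8, 2.00)
spot = monthly_fleet_cost(8, 2.00, discount=0.50)  # assumed 50% Spot discount
print(f"On-demand: ${on_demand:,.0f}/month, Spot: ${spot:,.0f}/month, "
      f"forgone savings: ${on_demand - spot:,.0f}/month")
```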

Why SageMaker Pricing Becomes Hard to Control at Scale

As your AI adoption grows, SageMaker pricing becomes increasingly difficult to forecast and optimize.

The costs are distributed across notebooks, training jobs, endpoints, storage, and monitoring (CloudWatch). Unless every resource is consistently tagged, budget controls effectively operate at the AWS account level, which makes it difficult to attribute specific costs to a specific model or research team. This lack of visibility lets idle resources and over-provisioned endpoints inflate monthly spend indefinitely.
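Attribution only works if every billable resource carries an owner tag. A minimal sketch of the roll-up, assuming cost line items have already been exported as simple dictionaries with a `tags` map (a hypothetical shape, not the schema of any AWS billing export): anything without the tag falls into an unattributed bucket that no team owns.

```python
from collections import defaultdict


def attribute_costs(line_items, tag_key="team"):
    """Sum costs per owner tag; untagged resources land in 'UNATTRIBUTED'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "UNATTRIBUTED")
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical monthly line items across the services named above.
items = [
    {"service": "notebook", "cost": 120.0, "tags": {"team": "research"}},
    {"service": "training", "cost": 480.0, "tags": {"team": "research"}},
    {"service": "endpoint", "cost": 2190.0, "tags": {"team": "search"}},
    {"service": "ebs-volume", "cost": 75.0},            # never tagged
    {"service": "cloudwatch", "cost": 40.0, "tags": {}},  # tagged, but no owner
]

for owner, cost in sorted(attribute_costs(items).items()):
    print(f"{owner:<13} ${cost:,.2f}")
```

In this example the unattributed bucket holds the storage and monitoring charges, exactly the kind of spend that tends to go unnoticed.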

Why Scaling Teams Handle Their Compute In-House

There is often a clear tipping point where SageMaker’s managed premium becomes unsustainable.

When monthly AI spend crosses the $10k–$20k threshold, the markup becomes impossible to ignore. At this stage, engineering leaders typically seek raw infrastructure pricing without the managed overhead. Advanced teams want granular control over GPU types, reserved instances, and savings plans—optimizations that are often restricted or more complex within the SageMaker ecosystem.
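One subtlety in sizing the premium: a markup of m on the raw rate is a smaller share of the final bill, m / (1 + m). A minimal sketch, assuming the whole monthly spend runs on ml. instances at a uniform markup (real bills also include storage and other charges that carry no instance markup):

```python
def premium_in_bill(monthly_ml_spend: float, markup: float) -> float:
    """Dollars of a monthly ml. compute bill attributable to the markup.

    `markup` is the fraction charged above the raw EC2 rate (0.25 = 25%).
    """
    raw_equivalent = monthly_ml_spend / (1 + markup)
    return monthly_ml_spend - raw_equivalent


for spend in (10_000, 20_000):
    for markup in (0.20, 0.25, 0.40):
        monthly = premium_in_bill(spend, markup)
        print(f"${spend:,}/month at {markup:.0%} markup -> "
              f"${monthly:,.0f}/month, ${monthly * 12:,.0f}/year")
```

At the low end of the threshold, a 25% markup means $2,000 of every $10,000 (20% of the bill) pays for the management layer, roughly $24,000 a year.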

TrueFoundry: A Better Alternative To SageMaker

TrueFoundry offers a fundamentally different approach by separating orchestration from infrastructure ownership.

Instead of reselling you compute with a markup, TrueFoundry orchestrates workloads directly on your own EKS (Kubernetes) and EC2 clusters.

- Zero Markup: You pay raw AWS infrastructure rates. TrueFoundry charges for the platform, not a percentage of your compute.

- Automated Savings: Idle resources are automatically shut down using utilization-based policies, eliminating zombie notebooks.

- Reliable Spot Inference: TrueFoundry enables reliable inference on Spot Instances by maintaining a small on-demand buffer and handling interruptions gracefully, significantly reducing production costs.
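The buffer idea can be sketched in a few lines: keep a fixed fraction of replicas on On-Demand so that some capacity survives a Spot reclamation while replacements are provisioned. This is a generic illustration under stated assumptions (hypothetical rates, a 25% buffer, reclaimed nodes lost instantly), not TrueFoundry's scheduling algorithm.

```python
import math


def plan_replicas(desired: int, on_demand_fraction: float) -> tuple[int, int]:
    """Split replicas into (on_demand, spot), keeping at least one On-Demand."""
    on_demand = min(desired, max(1, math.ceil(desired * on_demand_fraction)))
    return on_demand, desired - on_demand


def hourly_cost(on_demand: int, spot: int, on_demand_rate: float, spot_rate: float) -> float:
    """Blended hourly cost of a mixed fleet."""
    return on_demand * on_demand_rate + spot * spot_rate


def serving_after_reclaim(on_demand: int, spot: int, reclaimed: int) -> int:
    """Replicas still serving right after `reclaimed` Spot nodes are taken back."""
    return on_demand + max(0, spot - reclaimed)


# Hypothetical: 8 replicas, 25% On-Demand buffer, $2.00/hour On-Demand, $0.75/hour Spot.
od, sp = plan_replicas(8, 0.25)
mixed = hourly_cost(od, sp, 2.00, 0.75)
all_on_demand = hourly_cost(8, 0, 2.00, 0.75)
print(f"{od} On-Demand + {sp} Spot: ${mixed:.2f}/hour vs ${all_on_demand:.2f}/hour all On-Demand")
print(f"Serving after 3 Spot reclamations: {serving_after_reclaim(od, sp, 3)} of 8")
```

The buffer keeps a floor of serving capacity (here, 2 replicas) even if every Spot node is reclaimed at once; how large that floor must be depends on how much degraded throughput the service can tolerate while replacements start.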

Amazon SageMaker vs TrueFoundry: Cost Structure Comparison

This comparison focuses on unit economics rather than feature parity.
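At the level of unit economics, the two cost structures can be compared directly: a percentage markup grows with compute spend, while a flat platform fee does not. The sketch below assumes a hypothetical $2,000/month flat fee and a 25% markup; it is not TrueFoundry's actual pricing, and it ignores the engineering time needed to run your own clusters.

```python
def managed_cost(raw_compute: float, markup: float) -> float:
    """Monthly cost when compute is resold with a percentage markup."""
    return raw_compute * (1 + markup)


def self_hosted_cost(raw_compute: float, platform_fee: float) -> float:
    """Monthly cost when you pay raw rates plus a flat platform fee."""
    return raw_compute + platform_fee


def breakeven_raw_spend(platform_fee: float, markup: float) -> float:
    """Raw monthly compute spend at which both structures cost the same."""
    return platform_fee / markup


FEE = 2_000.0   # hypothetical flat platform fee, USD/month
MARKUP = 0.25   # hypothetical markup within the 20-40% range

print(f"Break-even raw spend: ${breakeven_raw_spend(FEE, MARKUP):,.0f}/month")
for raw in (4_000, 8_000, 16_000):
    print(f"raw ${raw:,}: managed ${managed_cost(raw, MARKUP):,.0f} "
          f"vs self-hosted ${self_hosted_cost(raw, FEE):,.0f}")
```

Below the break-even point the markup is cheaper than the fee; above it, every additional dollar of compute widens the gap in favor of the flat fee.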

Choosing the Right Platform for Long-Term AI Scale

AI infrastructure decisions should align with long-term cost and operational goals.

Amazon SageMaker is ideal for:

- Small teams or solo data scientists.

- Getting started quickly with minimal setup.

- Projects where the speed of deployment outweighs the cost of infrastructure.

TrueFoundry is ideal for:

- Scaling AI applications that require predictable costs.

- Teams spending over $10k/month on cloud compute.

- Enterprises that require infrastructure flexibility and want to avoid managed-service premiums.

Ready to Stop Paying the Markup?

SageMaker is excellent for early experimentation, but long-term AI success requires cost discipline. If your cloud bill is growing faster than your model performance, it’s time to rethink your infrastructure strategy.

TrueFoundry gives you the power of a managed platform with the economics of raw compute.

FAQs

How much does SageMaker cost?

SageMaker pricing varies by region and usage. You pay separately for compute instances (per hour), storage (GB/month), and data transfer. Instance prices range from a few cents per hour for basic CPUs to over $28/hour for advanced GPU instances.

How to reduce SageMaker costs?

To reduce AWS SageMaker costs, ensure you shut down idle notebook instances, use Spot Instances for training jobs, right-size your inference endpoints, and delete unattached EBS volumes. Alternatively, moving to an orchestration platform like TrueFoundry can eliminate the managed service markup entirely.
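Of these steps, shutting down idle notebooks is the easiest to automate. One way is a utilization-based rule: stop an instance once its utilization has stayed below a threshold for a sustained window. The sketch below is a generic illustration of that idea, not TrueFoundry's implementation or a built-in SageMaker feature; the 5% threshold and six-sample window are hypothetical values.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class IdleShutdownPolicy:
    threshold_pct: float = 5.0   # utilization below this counts as idle (hypothetical)
    window_samples: int = 6      # e.g. six 10-minute samples = one idle hour (hypothetical)

    def should_shut_down(self, utilization_pct: Sequence[float]) -> bool:
        """True only if every sample in the most recent full window is idle."""
        if len(utilization_pct) < self.window_samples:
            return False  # not enough history to judge; keep running
        recent = utilization_pct[-self.window_samples:]
        return all(u < self.threshold_pct for u in recent)


policy = IdleShutdownPolicy()
print(policy.should_shut_down([40, 35, 2, 1, 0, 0, 1, 3]))  # True: last six samples idle
print(policy.should_shut_down([2, 1, 0, 0, 1, 30]))         # False: recent spike
```

Requiring a full window of low readings, rather than a single one, avoids shutting down a notebook during a brief pause between cells.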

Is SageMaker free on AWS?

SageMaker offers a Free Tier for the first two months, which includes limited hours of notebook usage, training, and inference on small instance types. Once these limits are exceeded, standard pricing applies.

How is TrueFoundry more cost-effective than Amazon SageMaker?

TrueFoundry is more cost-effective because it allows you to run workloads on your own cloud account using standard EC2 instances, avoiding the typical 20–40% markup on SageMaker's ml. instances. It also provides automated features to shut down idle resources and reliably use Spot Instances for inference.
